Research snapshot: questions about Global Workspace Theory

I wrote an intro to some of my thoughts on consciousness here, which was more conceptual and less neurosciency. This post is a snapshot of some of the current technical questions that are on my mind. Please chime in if you know anything relevant to any of them. This is a pretty high-context snapshot and might not be that useful without familiarity with many of the ideas and research.

Q.1: Is the global neuronal workspace a bottleneck for motor control?

(Kaj's GNW intro, GNW wikipedia page)

Some observations to help build up context for the question and my confusion around it (it ends up being less a question and more a hypothesis I'm asserting).

Observation 1: People have trouble multitasking in dual-task style experiments, but training can improve their performance.

Corollary of 1: Some tasks require attention, and you can't do multiple things that require attention at once. But if you practice something a lot, you can do it "unconsciously", and you can do several "unconscious" tasks at the same time.

Observation 2: The "conscious bottleneck" seems to come into play during decision making / action-selection when in a novel or uncertain setting (i.e. performing an unpracticed and unfamiliar task).

Corollary of 2: The "conscious bottleneck" is a conflict resolution mechanism for when competing subsystems have different ideas on how to drive the body.

I think these are all basically true, but I now think that the implicit picture I was drawing based off of them is wrong. Here's what I used to think: the conscious bottleneck is basically the GNW. This serial, bottlenecked, conflict resolution mechanism is really only used when things go wrong: when two subsystems try to send conflicting commands to the body, or you get sense data that wildly violates your priors. The brain can basically "go about its business" and do things in a parallel way, only having to deal with the conflict resolution process of the GNW if there's error.

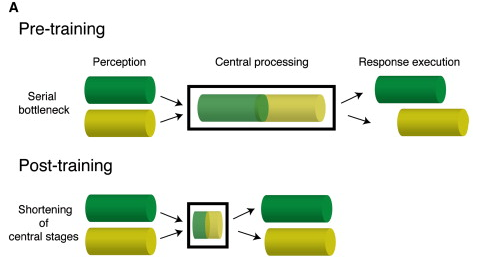

Easy tasks can route around the global workspace, hard ones or ones that produce error have to go through it. That's the previous idea. Now, this paper (https://linkinghub.elsevier.com/retrieve/pii/S0896627309004589) has begun to shift my thinking. For a specific set of tasks, it claims to show that training doesn't shift activity away from a bottleneck location, but instead makes the processing at the point of the bottleneck more efficient.

sorta like this
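To make the difference between the two pictures concrete, here's a toy sketch of a serial bottleneck in a dual-task setting. Everything in it is an assumption for illustration: the three-stage split, the `Task` fields, and all the millisecond values are made up, not taken from the paper. "Routing around" is modeled by a task skipping the shared stage, and "more efficient" by shrinking the time it spends in that stage.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    perception_ms: float          # parallel stage before the bottleneck
    central_ms: float             # stage that (usually) needs the bottleneck
    motor_ms: float               # parallel stage after the bottleneck
    uses_bottleneck: bool = True  # False models "routing around" the workspace


def dual_task_finish_times(first: Task, second: Task, soa_ms: float):
    """Finish times (ms after the first stimulus) when `second` starts soa_ms later."""
    # The first task never waits: its stages run back to back.
    first_central_end = first.perception_ms + first.central_ms
    first_done = first_central_end + first.motor_ms

    # The second task's central stage waits only if both tasks share the bottleneck.
    second_ready = soa_ms + second.perception_ms
    if first.uses_bottleneck and second.uses_bottleneck:
        second_central_start = max(second_ready, first_central_end)
    else:
        second_central_start = second_ready
    second_done = second_central_start + second.central_ms + second.motor_ms
    return first_done, second_done


# Hypothetical numbers, chosen only to show the shape of the effect.
untrained = Task("untrained", 100, 300, 100)
routed_around = Task("routed", 100, 300, 100, uses_bottleneck=False)
efficient = Task("efficient", 100, 100, 100)

print(dual_task_finish_times(untrained, untrained, soa_ms=0))          # (500, 800)
print(dual_task_finish_times(routed_around, routed_around, soa_ms=0))  # (500, 500)
print(dual_task_finish_times(efficient, efficient, soa_ms=0))          # (300, 400)
```

In this toy version both stories shrink the delay on the second task, which is part of why I had been happy to assume the "route around" story. The two only come apart when you look at where the processing happens, which is the kind of evidence the paper is about.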

This made me very dubious of the idea that "central conflict resolution mechanism" and "subsystems that have direct access to the nervous system" could coexist. Even though there is some centralized processing in the brain, it looks nothing like a central agent dispatching orders, commanding around other subsystems. This paper, though mostly over my head, paints a pretty cool picture of how the "winner takes all" broadcast aspect of the GNW is implemented in the brain, in a funky distributed way that doesn't rely on a "central chooser".

If subsystems had to route through the GNW to trigger motor actions, then this system or some variation could totally account for the serial conflict resolution function. But if subsystems can directly send motor commands without going through the GNW, how would subsystems in conflict be "told to stop" while the conflict resolution happens? The GNW is not a commander, it can't order subsystems around. Though it may be central to consciousness, it's not the "you" that commands and thinks.

All this leaves me thinking that I'm either missing a big obvious chunk of research, or that various motor-planning parts of the brain can't send motor commands except via the GNW. Please point me at any relevant research that you know of.

Q.2: Can activity on the GNW account for all experience?

One of the big claims of GNW is that something being broadcast on the GNW is what it means to be conscious of that thing. Given the serial, discrete nature of the GNW, it follows that consciousness is fundamentally a discrete and choppy thing, not a smooth continuous stream.

From having been around this idea for a while, I can spot a lot of my own experience that at first seemed continuous, but revealed itself to be discrete upon inspection. Some advanced meditators even describe interesting experiences like being able to see the "clock tick of consciousness". So for a while I've been willing to tentatively run with the idea that to experience something is to have that something active on the GNW. But recently while reading The Inner Game of Tennis I was reminded of a different flavor of awareness, one that's a bit harder to reconcile with the discrete framework: flow, "being in the zone", and the Buddhist "no-self". All of these states are ones where you act without having to "think", are intensely in the moment, and often don't even feel like it's "you" moving; it's as if your body is just operating on its own.

The Inner Game of Tennis contrasts this with typical moments when your "self 1" is active, constantly engaged in judgement and trying to shout commands at you to produce an outcome. "In the zone" vs "self-aware judgement mode" is probably something most people can relate to. The Buddhist no-self is a bit more intense, but I think it's fundamentally the same thing. Kaj's recent post is an excellent exploration of no-self from a GNW perspective. I think the no-self angle does a better job of exploring the way that the self/ego-mind/"self 1" is a constructed thing that is experienced, and is not actually you. It's the difference between being aware of your breath, and being aware of a memory of your breath, or the thought "I'm aware of my breath".

The constructed ego-mind narrative that gets experienced is clearly discrete and choppy. But is no-self? Is being in a total state of flow in a championship tennis game still an experience that is mediated/bottlenecked by the GNW? I take pause because of the vast difference in how broad my awareness feels when I'm in flow vs in the ego-mind. I take in more of my surroundings, I feel more of where my body is in space and time. It all seems much more high resolution than typical ego-mind consciousness. Does the GNW have a good enough "frame rate" to account for it?

There are two main lines of thought I have on how to think of this problem.

Attentional Blink

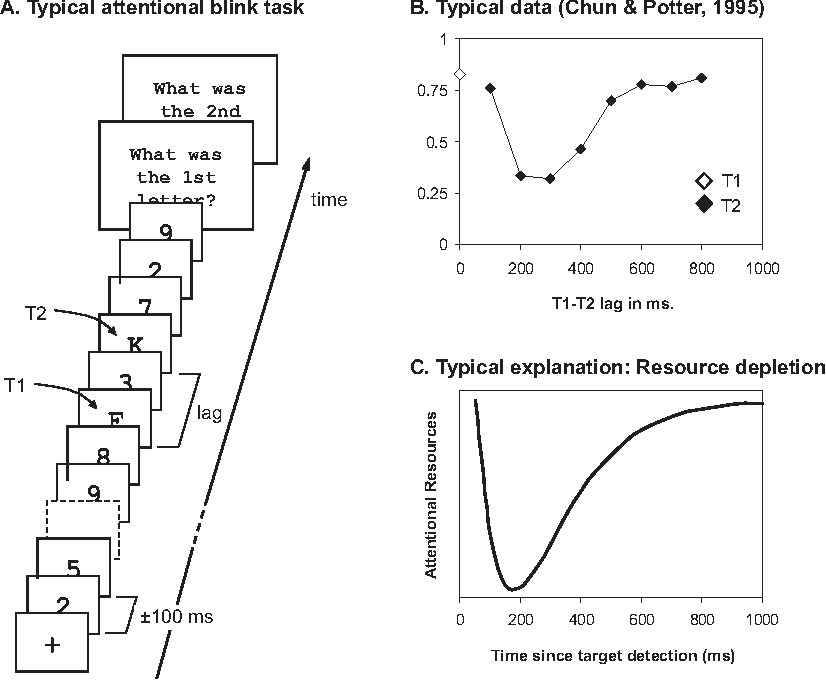

Both the attentional blink and the psychological refractory period seem related to a possible "frame rate of attention/consciousness/GNW". The below image tells you almost everything you need to know about the classic type of attentional blink experiment.

(source)

You're told to look out for two targets (T1, T2) in a stream of symbols. If the targets are too close together, people fail to see the second target. It's almost as if paying attention to the first target causes a "blink", and you can't pay attention to or process anything else during that blinking period. The attentional blink has often been framed as key evidence for a central bottleneck that has an inescapable limit to how fast it can process data.

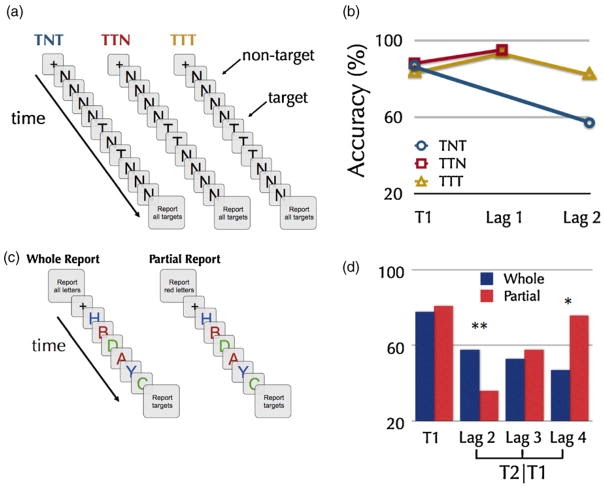

It turned out to not be as simple as I previously thought (here's a lit review that covers shifts in research on the subject). If you put three targets all in a row, people are able to detect them just fine. Additionally, if you ask people to remember the entire sequence they can do better than when you ask them to remember only some of the characters (up till the point where you max out working memory). This makes no sense if the earlier experiments were interacting with a fundamental processing period that anything being attended to requires.
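Here's a minimal sketch of the naive "fixed processing period" reading of the blink, just to show where it breaks. The function name, the 100 ms per item presentation rate, and the 400 ms busy period are all assumptions I picked for illustration, not values from the lit review.

```python
def naive_blink_detections(stream, targets, item_ms=100, busy_ms=400):
    """Targets a bottleneck with a fixed busy period would report.

    Assumption: attending to a target locks the bottleneck for busy_ms,
    and any target that arrives while it is locked is simply missed.
    """
    detected = []
    busy_until = 0  # time (ms) at which the bottleneck is free again
    for index, symbol in enumerate(stream):
        onset = index * item_ms
        if symbol in targets and onset >= busy_until:
            detected.append(symbol)
            busy_until = onset + busy_ms
    return detected


digits = set("0123456789")  # digits are targets, letters are distractors

# Classic setup: T2 lands inside the busy period and is missed.
print(naive_blink_detections("KQ3RT7MZ", digits))   # ['3']

# Targets far enough apart: both are seen.
print(naive_blink_detections("KQ3RTMZW7", digits))  # ['3', '7']

# Three targets in a row: this model says only the first gets through.
print(naive_blink_detections("KQ357RT", digits))    # ['3']
```

The last case is the problem. This toy model predicts that the second and third targets vanish, but the experiments described above found that people detect three targets in a row just fine, so a fixed busy period can't be the whole story.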

images summarize the experiments mentioned above, from the previously linked lit review

I found some papers that propose new models of the attentional blink, but haven't had time to explore them, nor have I gotten a sense of whether they still relate this phenomenon to a central bottleneck, or how the idea of a bottleneck is modified to accommodate these experiments. So I guess this is a temporary roadblock to exploring a "frame rate" idea.

Post hoc unpacking of information, filling in a story of experience with a guess at what was happening

Think back to ego-mind consciousness for a second. It generally maintains the experience that it is a high resolution constant stream of details, but it can be more useful to see it as a system that is flexibly constructing a story about what is happening, and you can "ping" that story to see its current state. This story is constructed over time; it's not just a direct copy of "what you were experiencing", it can be added to and grown. So when you reflect back on "what was I experiencing 5 seconds ago?" you can find a lot more detail than what was in your experience in that exact moment.

Might there be a similar effect with the experience of flow states?

Ex. I'm in the zone, and I feel like I know exactly where my body is in space and time. My naive interpretation is that this experience is produced by hundreds or thousands of little snippets of somatic info being routed through the GNW. But what if, when I'm experiencing acute awareness of my body, what I'm experiencing is an "active, high alert, and ready" signal being put on the GNW? Maybe the "consciously active information" that makes up this experience is not hundreds of bits of data, but just a "ready" signal. Maybe afterwards, when I reflect on the experience, the rich "high alert" state lets me draw out a lot of details about where exactly my body was, but in the moment that's not actually what was active in my GNW.

Not married to this one, but I think it's an avenue I want to look more into.

Q.3: How does the predictive processing account of attention play with the GNW account?

(I'm also just generally interested in how these two models can or can't jibe with each other. They are trying to explain different things, and so aren't competing models, and yet there are plenty of areas where they both seem to have something to say about a topic.)

Attention for GNW: Working memory is more or less the functional "workplace", and the GNW is the backbone that supports updating and maintenance of working memory, allowing its contents to be operated on by the various subsystems (Kaj's post (wow, I'm linking to Kaj's posts a lot, it's almost like they're amazing and you should read the whole sequence), and the Dehaene paper). Having attention on something corresponds to that something either actively being broadcast on the GNW, or being held in working memory; both are states where the info is readily accessible.

In predictive processing, attention is a system that manipulates the confidence intervals on your predictions. Low attention -> wide intervals -> lots of mismatch between prediction and data doesn't register as an error. High attention -> tighter intervals -> slight mismatch leads to error signal.
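As a rough sketch of that PP picture, here's attention modeled as a gain that narrows the interval around a prediction. The function name, the choice to divide a baseline spread by an `attention` gain, and the two-standard-deviation threshold are all my own assumptions for illustration; actual PP models are formulated in terms of precision weighting and are a lot richer than this.

```python
def registers_as_error(prediction, observation, base_sd, attention, z_threshold=2.0):
    """True if the mismatch falls outside the attention-scaled interval.

    Assumption: attention acts as a gain that divides the baseline spread,
    so more attention means a tighter interval around the prediction.
    """
    if attention <= 0:
        raise ValueError("attention must be positive")
    effective_sd = base_sd / attention
    z = abs(observation - prediction) / effective_sd
    return z > z_threshold


# Same prediction and same sense data each time; only attention changes.
for attention in (0.5, 1.0, 4.0):
    print(attention, registers_as_error(10.0, 11.5, base_sd=1.0, attention=attention))
# 0.5 False
# 1.0 False
# 4.0 True
```

The mismatch of 1.5 is identical in all three cases. It only counts as an error once attention has tightened the interval enough.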

These two notions of what's happening when something is in your attention aren't incompatible, but I don't have a sense of how GNW attention can mediate and produce the functions of PP attention. The metaphors I'm using to conceptualize GNW are quite relevant to this. The GNW seems like it can only broadcast "simple" or "small" things. A single image, a percept, a signal. Something like a hypothesis in the PP paradigm seems like too big and complex a thing to be "sent" on the GNW. How does GNW attention relate to or cause the tightening of acceptable error bounds in a hierarchy of predictive models? If a hypothesis is too big a thing to put on the GNW, then it can't be laid out and then "operated on" by other systems. If coming to attention somehow triggers a hypothesis to adjust its own confidence intervals, what's to stop it from adjusting them whenever? If coming to attention somehow triggers some other confidence-interval-tightening system to interact with the hypothesis, why couldn't it interact with the hypothesis beforehand?

Basically I've got no sense of how attention in the GNW sense can mediate and trigger the sorts of processes that correspond to attention in the PP sense. All schemes I think up involve a centralized "commander" architecture, and everything else I'm learning about the mind doesn't seem to jibe with that notion. The research I've been doing makes centralization in the brain seem much more "router" like than "commander" like.